In an unexpected twist, OpenAI dropped its first open models in six years. Two, in fact.

In addition to the flagship 120B model (gpt-oss:120b), Sam Altman and team also packaged up an efficient small language model version (gpt-oss:20b).

This unexpected gift to the AI community comes with Apache 2.0 licensing, opening doors for developers, researchers, and organizations worldwide to build upon these foundations without restrictions.

The larger scale version can fit entirely in one NVIDIA H100 (80GB RAM) while, even more importantly, the Small Language Model (SML) can work in consumer hardware, including, as OpenAI notes, even a high-end laptop.

Testing the New gpt-oss Models

Everyone has been scrambling over the past 24 hours to answer the question: How well do these new models perform?

That’s important, because the open nature (Apache 2.0 license) of these models means anyone—from startups to students—can embed them in real-world applications. Think: local RAG systems for law, medicine, real estate, education. Or training custom LoRAs for niche industries and orgs without needing API access or signing their soul to a SaaS vendor.

Most of us don’t own an H100 which is the domain of enterprise customers (about $30,000 per H100 card). So that means testing the new large language model is beyond my capability. However, the 20B is perhaps the Goldilocks version. Just right. It can run on most well-equipped home servers (high-end laptops might be a stretch unless they are really well-spec’d). You’ll want a beefy NVIDIA GPU with as much ram as possible.

Real-World Testing: Can Consumer Hardware Handle Advanced AI?

For my tests I used a modest, and admittedly aging, Windows desktop PC.

I think this is a reasonable way to test these new models, as not everyone is going to be running the latest and greatest hardware. Non-profits, social service-related organizations, and educational institutions are likely examples of those running on constrained budgets.

This configuration represents a reasonable middle ground – not cutting-edge, but certainly capable. It’s the same system I use for 4K video editing and other creative work, making it a practical testbed for real-world AI deployment.

For testing, I wasn’t so interested in synthetic benchmarks. OpenAI has already published those benchmarks for gpt-oss, and they include the increasingly standard tests: Codeforces Competition code, Humanity’s Last Exam, HealthBench, HealthBench Hard, AIME 2024 (tools), AIME 2025 (tools), GPQA Diamond, MMLU, Tau-Bench Retail, AIME Competition math, and GPQA Diamond (tools). A lot of acronyms basically to say: gpt-oss compares favorably to OpenAI’s marquee and well-known cloud models including o3, o4-mini and o3-mini.

Why MoE Matters (and What It Means IRL)

OpenAPI notes that both models use a Transformer that “leverages mixture-of-experts (MoE) to reduce the number of active parameters needed to process input.” What does that mean in practical terms? It means the models respond faster to prompts. Because the model intelligently determines a sub-set of the entire parameter base, there’s less information to process. We’re seeing this as an increasingly common architecture across cutting edge LLMs (mixtral, for instance). Consequently, these models don’t require as much compute if they had not employed MoE.

Think of it as a well-organized company where specific departments handle relevant tasks, rather than involving everyone in every decision. This efficiency makes it possible to run sophisticated models on more modest hardware.

So I’m most interested in just using the new models, and comparing prompts with typical everyday sort of scenarios. Most importantly: oss-gpt:20b vs. ChatGPT o4. The latter a paid model ($20, $100, $200+ per month depending on usage).

Could an open-weight model compete with its larger, famous sibling? And running, no less, in a private home server environment with an aging, but still relatively performant computer hardware (typically used for video editing).

Head-to-Head: oss-gpt:20b vs GPT-4o

Prompt 1: Random prompt I made up

How do people buy an H100 card?

GPT 4o:

oss-gpt:20B:

Short answerThe Nvidia H100 Tensor‑Core GPU is a data‑center‑only chip.If you’re a company that needs it for training or inference workloads, you buy it through:

1. What is an H100?

- Form factors – PCI‑Express (PCIe) and SXM‑4 (high‑bandwidth, in‑server).

- Typical price – ~$15 000–$20 000+ per GPU, depending on configuration and quantity.

- Target market – AI/ML research labs, enterprises, cloud providers, large‑scale HPC workloads.

- Availability – High demand, supply constraints; often on a wait‑list or a 3–6 month lead time.

2. Buying it for your own infrastructure

Step 1: Identify the requirement

- Workload – Training large language models, inference, scientific simulations, etc.

- Platform – Do you need a GPU‑optimized server (SXM‑4) or a PCIe card that fits a standard rack?

- Scale – One card, a rack of 8, or a data‑center deployment?

- Budget – Remember that you also need a compatible chassis, power supply, networking, cooling, and software licensing.

Step 2: Contact Nvidia Enterprise Sales

- Visit the Nvidia Enterprise site – fill out a contact form or call the sales team.

- Provide details – your organization type, use case, expected order volume, and timeline.

- Receive a quote – usually includes pricing, shipping, support, and licensing terms.

- Sign the agreement – a commercial contract that covers compliance, export controls, and service levels.

Tip: If you’re buying in bulk (10+ GPUs) you might negotiate volume discounts or a lease‑to‑own program.

Step 3: Place the order and delivery

- Payment – usually net‑30 or 60 days after delivery.

- Shipping – H100s are subject to export controls (ITAR, EAR), so you’ll need to provide shipping/consignee details and may have to sign an export‑control statement.

- Receiving – The card will be shipped in a secure, tamper‑evident packaging.

Step 4: Integration & support

- Hardware – Install the card in your server or rack. Verify that firmware and BIOS support the SXM‑4/PCIe interface.

- Software – Install the latest Nvidia driver, CUDA toolkit, and any AI/ML frameworks.

- Support – You’ll have a 1‑year warranty and access to Nvidia’s technical support via the Enterprise portal.

3. Buying it via OEM / Platform Partners

If you don’t want to assemble a server yourself, order an H100‑ready system:

Process:

- Choose a chassis that supports H100 (check SXM‑4 compatibility).

- Configure the CPU, memory, networking, and storage.

- Add the H100(s).

- Place the order; the OEM handles logistics and warranty.

4. Renting or Using in the Cloud

Because the upfront cost and maintenance burden are high, many organizations rent H100s from cloud providers:

Advantages:

- Zero upfront cost – pay per second or per hour.

- No maintenance – the provider handles cooling, power, firmware, and driver updates.

- Scalable – add or remove GPUs on demand.

Considerations:

- Long‑running workloads can become expensive; compute‑optimized pricing and spot instances can help.

- Some providers charge higher rates for H100 than for A100 due to its novelty.

5. Buying Used or “Resold” H100s

Warning: The used market is fraught with risk.

- No warranty – many sellers are individuals or small shops.

- Possible counterfeit – counterfeit GPUs can appear very similar to genuine ones.

- Exclusion from support – Nvidia won’t service or update a used card unless you have a valid warranty.

- Legal compliance – Export controls apply to used GPUs; the seller must have export authorization.

If you decide to explore this route, look for reputable resellers that specialize in GPU sales, such as:

- Octo (Octo.com) – offers refurbished GPUs with a 30‑day warranty.

- GPU‑Cloud – specializes in H100/H200 inventory.

- Serverless – sells used GPU racks (but verify each card individually).

How to verify:

- Run a fingerprinting tool (e.g.,

nvidia-smi -L+nvidia-smi -q) and check the serial number against Nvidia’s database.- Run stress tests (e.g.,

jetson_inferenceorstress-ng) to check thermal stability.- Verify that the driver and firmware are up to date.

6. Summary Checklist

Final Thought

The Nvidia H100 is not a consumer GPU. It’s a high‑performance, high‑cost data‑center accelerator. Buying one means committing to a structured procurement process, export‑control compliance, and long‑term support. For most organizations, the simplest path is to buy through Nvidia’s Enterprise channel or a reputable OEM, and to consider cloud renting for short‑term or burst workloads. If you’re a research group or startup with limited capital, start with a smaller, lower‑power GPU (like the A100 or A30) and scale to the H100 when the workload truly demands it.

Prompt 2: Reasoning & Logic

You have a basket with 10 apples. You give 3 to Alice, 2 to Bob, and eat 1. How many are left, and who has the most?

GPT 4o:

Let’s break it down:

Start: 10 apples

Give to Alice: -3 apples → you now have 7

Give to Bob: -2 apples → you now have 5

Eat 1: -1 apple → you now have 4 apples left

Final count:

You: 4 apples (the uneaten ones still in your basket)

Alice: 3 apples

Bob: 2 apples

Eaten: 1 apple (gone)

Who has the most?

You do, with 4 apples left in the basket.

🧺🍎🍎🍎🍎 (the survivors)

oss-gpt:20B:

You started with 10 apples.

- Give 3 to Alice → 7 left

- Give 2 to Bob → 5 left

- Eat 1 → 4 left

Left in the basket: 4 apples.

Who has the most? Alice, with 3 apples (you have 1, Bob has 2).

Prompt 3: Natural Language Understanding

Summarize this short passage in one sentence:

“Despite the roaring storm outside, she felt calm, almost serene, as if the chaos mirrored her own internal peace.”

GPt 4o:

She felt a deep sense of calm, her inner peace reflected in the storm’s external chaos.

oss-gpt:20B:

She stayed calm and almost serene amid the roaring storm, feeling its chaos mirrored her own inner peace.

Prompt 4: Creativity & Style Transfer

Rewrite this sentence in the style of Ernest Hemingway:

“She walked into the room and noticed how quiet it was.”

GPt 4o:

She walked in. The room was quiet. She felt it before she heard it.

oss-gpt:20B:

She walked in. The room was quiet.

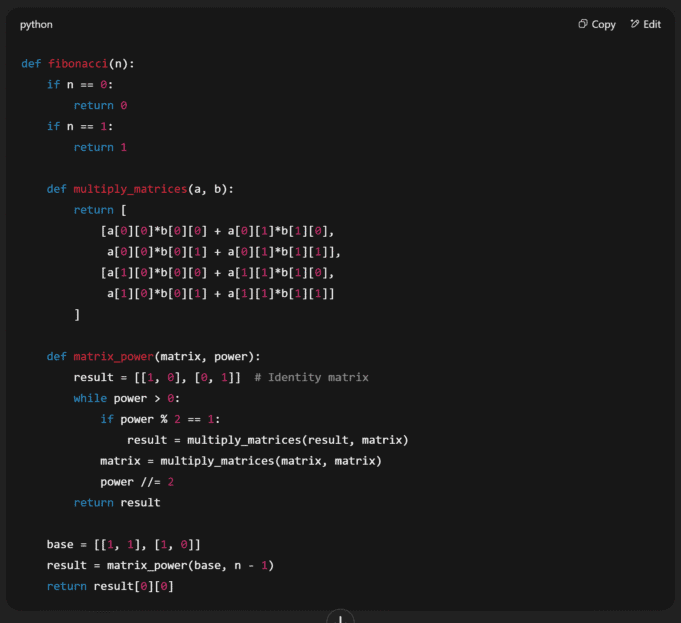

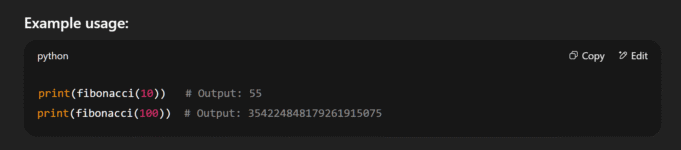

Prompt 5: Code Generation (Python)

Write a Python function to return the nth Fibonacci number. Optimize it for performance.

GPT 4o:

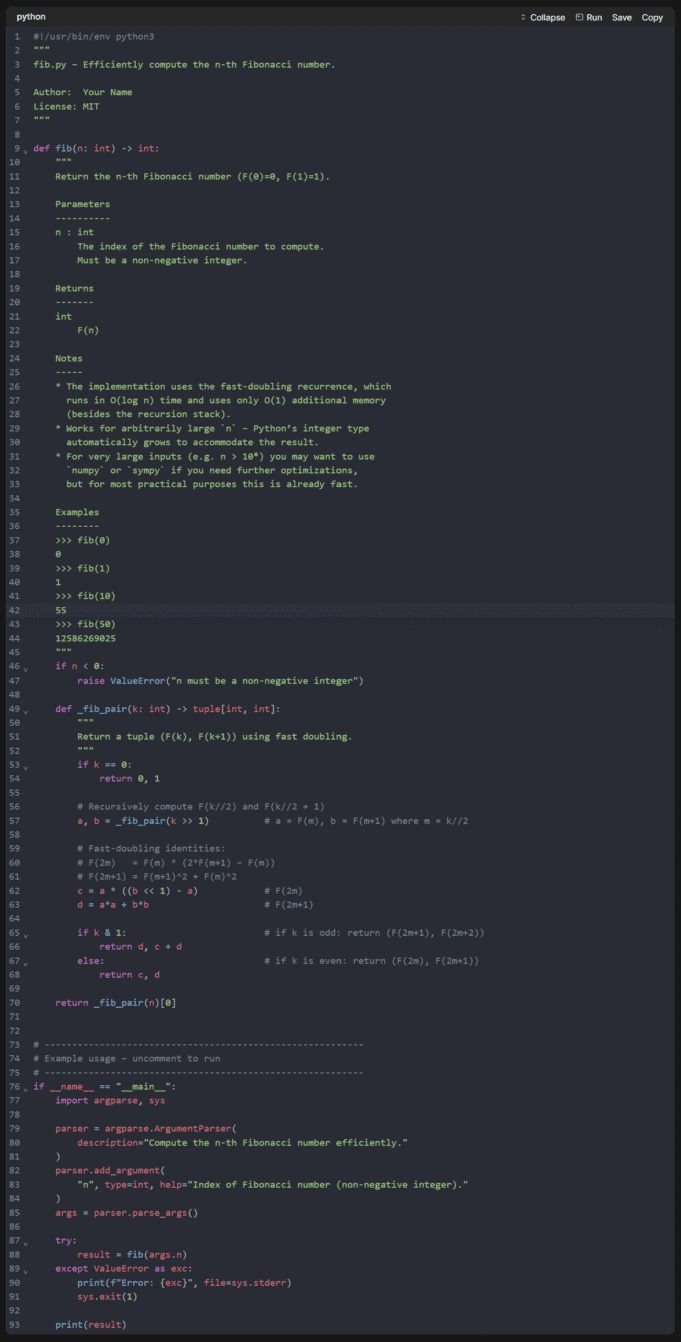

For high performance, the best approach to calculate the nth Fibonacci number is using matrix exponentiation, which gives you O(log n) time complexity.

Here’s a Python implementation optimized for performance:

Why this is fast:

Avoids recursion (which can overflow for large

n)No repeated computation

Scales logarithmically with

nLet me know if you’d like a version using

@lru_cachefor memoization or an iterative DP approach instead.

oss-gpt:20B:

Below is a single‑file, self‑contained implementation that returns the n‑th Fibonacci number in O(log n) time and O(1) extra space (apart from the recursion stack, which is onlylog ndeep).The algorithm is the classic fast‑doubling method, which is both fast and numerically exact for arbitrarily large integers (Python’s built‑in

inttype grows as needed).

Prompt 6: Retrieval-style Q&A / Fact Recall

What is the capital of Estonia? Provide one historical fact about the city.

GPT 4o:

The capital of Estonia is Tallinn.

Historical Fact:

Tallinn’s Old Town is one of the best-preserved medieval cities in Europe and is a UNESCO World Heritage Site. The city’s fortifications date back to the 13th century, when it was a major trading hub in the Hanseatic League. 🏰

oss-gpt:20B:

Tallinn is the capital of Estonia.

Historical fact: It was granted city rights by King Eric I of Denmark in 1219, making it one of the earliest documented towns in the Baltic region.

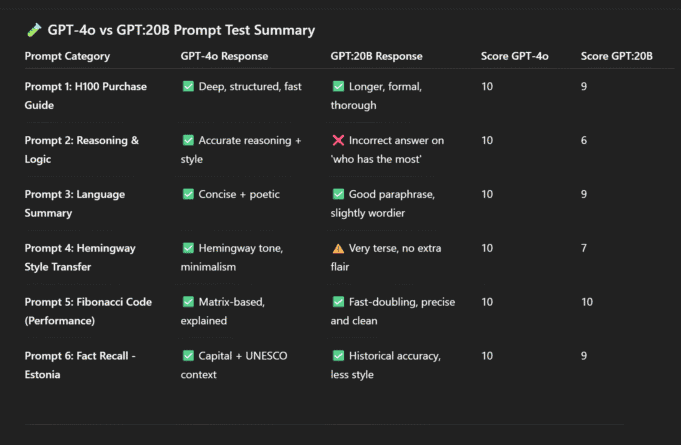

Prompt Highlights

- Code generation: Both models nailed a performant Fibonacci implementation. gpt-oss used fast-doubling; GPT-4o leaned on matrix exponentiation.

- Creative writing: GPT-4o showed more flair (and emojis), while gpt-oss felt stiff.

- Logic: gpt-oss tripped on a simple apple-counting problem. Not a dealbreaker, but worth noting.

- Fact recall: Both knew Tallinn is Estonia’s capital. gpt-oss even dropped a nice historical nugget from 1219.

Prompt Scorecard

(via GPT 4o)

Performance Considerations and Practical Applications

Running gpt-oss:20b on consumer hardware requires patience. Response generation averaged 2-3 tokens per second on my setup – functional, but far from the instantaneous responses we’ve grown accustomed to with cloud services. However, this trade-off brings significant advantages:

- Complete data privacy: Everything runs locally

- No usage limits: Generate as much content as needed

- Customization potential: Fine-tune for specific domains

- Cost predictability: One-time hardware investment

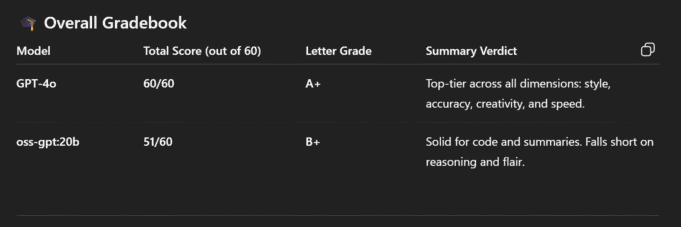

Thoughts and Verdict: Not Quite a Giant Slayer… But Close

- GPT-4o A+

- oss-gpt:20b B+

Looking Forward: The Democratization of AI

As for the results in this limited, non-scientific test?

GPT 4o wins.

gpt-oss:20b, however, is likely the best overall small language model now available in the open market. Other models may still be useful for special scenarios such as coding, medial and financial, etc. But, for an overall chatbot I found this new model to be consistent in providing results that, while not quite on par with the cloud AI models such as Claude Opus/Sonnet and ChatGPT 4o/o4-mini/o4-mini-high, I did find it pretty close — which is in itself amazing. To think how far these models have some in such a short time!

oss-gpt:20b? It’s yours to run, remix, and integrate. No strings attached.

Despite a few slips in reasoning and tone, it’s shockingly good for a model you can spin up in Docker.

I have to tip my hat to ChatGPT though: It is still the King of Emojis. It just loves them. Em-dashes too. Can’t get enough!

Final Thoughts

The era of local LLMs just got a major upgrade.

Expect to see this model — and variations of it — showing up everywhere: in IDEs, research labs, smart assistants, and maybe even embedded in your espresso machine (don’t rule it out). Maybe even StarkMind and upcoming Vertigo projects?

As someone who’s watched the AI landscape evolve rapidly over the past few years, this feels like a watershed moment. The gap between open and closed models is narrowing, and that’s good news for innovation, education, and equitable access to transformative technology.

The open future of AI just got a whole lot more interesting.

You can explore OpenAI’s open-weight models on Hugging Face:

- gpt-oss-120b — for production, general purpose, high reasoning use cases that fit into a single H100 GPU (117B parameters with 5.1B active parameters)

- gpt-oss-20b — for lower latency, and local or specialized use cases (21B parameters with 3.6B active parameters)