It started as a routine server log check. I occasionally (reluctantly) take a trip down to the IT Dungeon to see what’s going on and if starkinsider.com has any issues to address. After 20 years of running this site, most of it on WordPress, I’ve learned that, well… there’s always something wrong. Ah, yes, the joys of self-hosting.

So it was supposed to be one of those “I’ll just peek for five minutes” things that always turn into a 2 a.m. rabbit hole.

Dozens of bots. Names I’d never before seen. User agents that read like a roll call at an AI startup convention.

Googlebot? Sure. Bingbot? Fine. But ClaudeBot? GPTBot? Bytespider? YisouSpider? And something cryptically named “YouBot” that sounded either friendly or vaguely threatening.

Suddenly my NGINX logs looked like a Pokémon deck. Only these little dudes weren’t here to cuddle; they were here to feed. On my (our!) content and data.

My first thought: We’re under attack.

My second thought: Wait, is this… normal now?

“The Web isn’t dead. It’s just being quietly digested.”

Welcome to 2025, where the audience is half human, half machine. Search, as in typing something into a little box at google.com, is morphing into something else: AI agents, LLMs, and a swarm of crawlers hoovering up content to train on, summarize, and—if we’re lucky—attribute.

This is the story of how I went bot hunting, learned to sort the saints from the sinners, and why this matters if you publish anything on the internet… especially if you’d like to be seen (and credited) in the post-Google era.

The Discovery: “So… many… user-agents”

This is where your server logs become a bot convention. In the old days it was mostly our beloved Googlebot, crawling and discovering content for users to find on google.com. Times, though, are changing, and rapidly too.

With the help of Claude again, I ran a quick grep across access logs, expecting the usual suspects. Instead I found dozens of new (to me) bots. Some announced themselves proudly. Others disguised as browsers or “curl/7.64.1” because apparently this is the new fake driver’s license.

Pattern spotting ensued:

- Content focus: Which sections of Stark Insider were they hammering? (Culture? Tech? That random pomegranate post?)

- Crawl cadence: Midnight burst scrapes vs polite daylight indexing.

- Robots.txt manners: Do they read and respect it? Or treat directives as merely as a polite suggestion.

I dumped everything into buckets—good, meh, nope. Then piped those bad IPs and user agents into Fail2Ban, because yes, sometimes you need a digital bouncer with brass knuckles.

Here’s what a typical day used to look like:

- Googlebot: 40% of bot traffic

- Bingbot: 15%

- Various social media crawlers: 20%

- Random scrapers and spam bots: 25%

Here’s what I was seeing now:

- Traditional search bots: 30%

- AI training bots: 45%

- Mystery bots with cryptic purposes: 25%

The landscape had shifted while I wasn’t looking. Like discovering your quiet neighborhood had become Times Square overnight.

Intent Matters: What do these bots really want?

Let’s be blunt: most AI/LLM crawlers want training data. They’re building models. That can be good (discovery, citations) or bad (zero credit, zero traffic, thanks for the free lunch). Others are SEO tools (Ahrefs is one I find useful), price scrapers, shady aggregators, or just sloppy code pinging everything that moves.

With the help of Claude I was able to figure out that one major bot operation was using almost 800 different IP addresses as part of a sophisticated scraping mission. I learned that by spreading hits across multiple IPs the offender could sort of fly-under-the-radar and my fail2ban rules would not detect these log entries because they were just one or two hits for each IP. Add them up, however, and a quick strike could net approximately 1,600 (2x 800 IPs) page/content scrapes. I would be none the wiser.

In any case, I pulled three months of logs and started categorizing. Each bot left fingerprints in the form of user agent strings, crawl patterns, request frequencies. Some were polite, respecting robots.txt like well-mannered dinner guests. Others? Not so much.

The worst bots, as expected, would spoof UA strings, slap on a cheap digital wig, and slip into the Stark Insider party.

Some patterns emerged:

The Polite Ones: Announced themselves clearly, provided documentation links, crawled at reasonable rates. Like neighbors who knock before entering. (Googlebot, Bingbot, Anthropic/Claude)

The Aggressive Ones: Hit the server like they were trying to download the entire internet before lunch. No rate limiting, no respect for server resources. (Bytespider, Bytedance)

The Mysterious Ones: Vague user agents, no documentation, crawling patterns that made no sense. Digital ghosts wandering through our content.

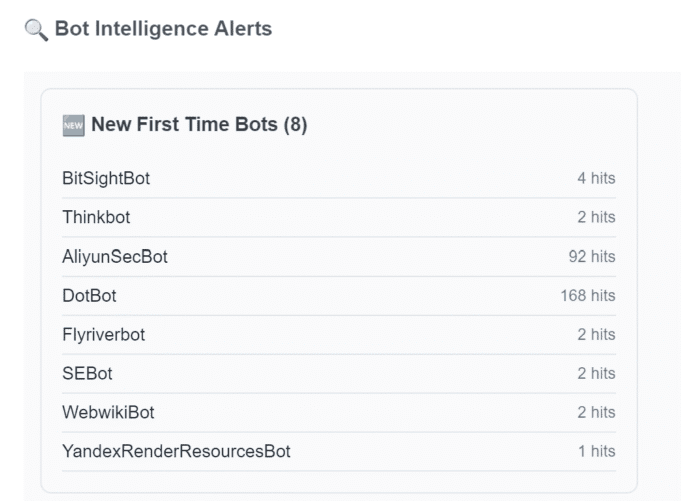

I asked Claude to create a daily Bot script. You might be surprised by the level of intel sitting on your server, even for small sites. Prior to Gen AI creating these sorts of things would be near impossible, or at best a worthless time sink. I asked Claude to alert me to new, never before seen bots. This is what that looks like:

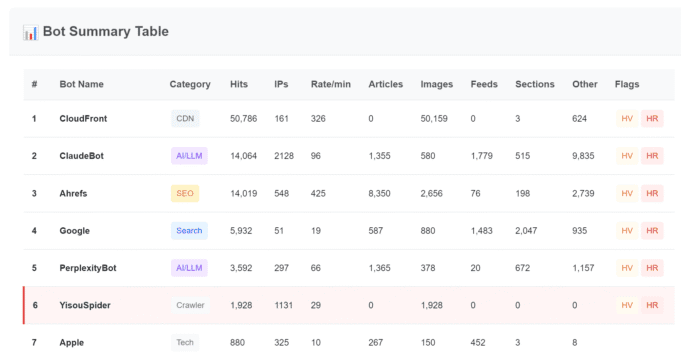

Bot Summary Table (Partial)

Spotting New Bots

Getting these email reports daily really helped me begin to understand the cadence, goals and patterns that these companies seek to employ.

As a small publisher, I had a one primary concern…

Attribution: Where do our words actually surface

Example: “Is the Sony A6000 still worth it?”

This is an SI evergreen that regularly shows up in AI answers, and in traditional Google searches as well.

- ChatGPT often summarizes and links (sometimes).

- Claude tends to quote and inline attribute (chef’s kiss).

- Others… reference without attribution.

Point being: we want to be in those answers no doubt. But we also want a name credit, a link, a whisper of “Stark Insider” somewhere. That’s the give-and-take of this new world. So I:

- Explicitly listed allowed bots in robots.txt

- Added an AI-Index endpoint with usage terms

- Started tracking which articles LLMs seem to love

It’s like watching your words get absorbed into a massive digital consciousness. Your content becomes part of the training data, the collective knowledge, but your name? That’s optional, apparently.

As venture capitalist Marc Andreessen once noted, “Software is eating the world.” Well, now AI is eating the software that ate the world. Meta, isn’t it?

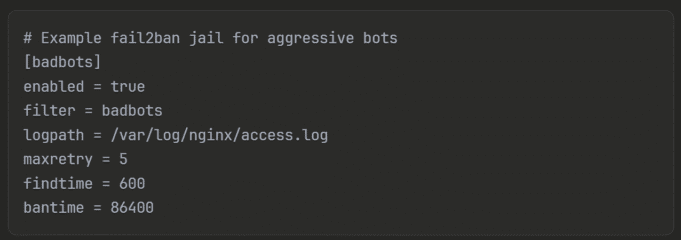

Implementation: Bring in the digital judge & jury (Fail2Ban aka the bouncer)

Fortunately, thanks to ChatGPT, I learned about something called fail2ban. This is the bouncer that stands outside the club door frowning at everyone and keeping us peons from entering. It’s free and open source, and installs with a single line. You can just let it run with the default configuration, or tweak as needed. For instance: I whitelist Amazon CloudFront because we use that CDN to serve content at the edge (images, js, css mainly), closer to end users.

Over several weeks, including lots of trial and error, discussions with Loni, and feedback from Claude, I landed on this basic classification system:

VIP Access (Never Block):

- Googlebot (still pays some of the bills)

- Bingbot (Microsoft’s scrappy underdog)

- ClaudeBot (Anthropic plays nice)

- GPTBot (OpenAI, despite the attribution issue)

Monitored Access (Trust but Verify):

- Perplexity (good with attribution)

- Various academic crawlers

- Legitimate monitoring services

The Banned List (Jail time!):

- Aggressive scrapers hitting 1000+ pages/minute

- Bots ignoring robots.txt

- Anything from known bot farms

The fail2ban rules read like a judicial system. First offense? 10-minute timeout. Second offense? An hour in digital jail. Third strike? Welcome to the recidive list: banned for 30 days. It’s harsh, but necessary.

The AI Mind Shift: Writing for the Machines

But this is all the tactical nitty-gritty. Obviously, there’s a much bigger picture at work, one that I think most of us our struggling to understand.

Nate from Nate’s Notebook wrote a short paper, Beyond SEO: Winning Visibility in the AI Search Era, that really opened my eyes. He notes the usual things we’re witnessing like the downward trend in global search traffic, and its subsequent impact on news and media. But the real juicy stuff is framing the new thing: AI SEO.

We’re at an inflection point. For twenty years, we’ve optimized for Google. Meta descriptions, keywords, backlinks — the whole SEO song and dance. But what happens when Google isn’t the primary discovery mechanism?

Write beautifully for humans, structure obsessively for machines.

What happens when people stop Googling and start ChatGPT-ing? (I spend most of my days with five or six Chrome tabs open to AIs like Claude, ChatGPT, Gemini, Copilot and Perplexity).

The game is changing. Fast. As we explored in our piece on AI disrupting creative work, the shift isn’t just technical — it’s fundamental.

The New Optimization Playbook

Old World (Google-first):

- Keywords in titles

- Meta descriptions

- Backlink building

- Page speed optimization

New World (AI-first):

Structured data (JSON-LD is your friend)

- Clear, factual writing

- Comprehensive coverage

- Machine-readable formats

- API accessibility (maybe)

It’s like we’re shifting from writing for humans who use search engines to writing for machines that answer humans. The intermediary changed, and we’re all scrambling to keep up. I’m paraphrasing, but that’s the essence of Nate’s piece. A bit of a shocker really.

Looking Forward: Commodity or Community?

So where does this leave publishers, bloggers, and content creators? Are we just feeding the machine, creating commodity content for AI training sets? Or is there something more? Does pooling our content into massive LLM cauldrons commoditize us? Possibly. But it can also amplify us.

A kid researching mirrorless cams might not find Stark Insider via SERP, but they might meet us through a Claude answer box (Yes, the Sony a6000 is absolutely still worth it!) and click through because we sounded like real humans who actually tested cameras, obsessed about espresso shots, and watched weird Czech New Wave films at 1 a.m.

Nevertheless, in my opinion core principles remain:

- Quality still matters: AIs trained on garbage produce garbage. Good content makes better AIs.

- Voice remains valuable: While facts commoditize, perspective and experience don’t. Slow cinema can’t be replicated by pattern matching.

- Direct relationships win: Email lists, communities, loyal readers. I suspect these become more valuable, not less.

- New discovery mechanisms: If ChatGPT sends someone to read our espresso brewing deep-dive, that’s still a win.

Final Thoughts: Embracing the Bot Overlords

After two weeks of bot wrangling, I’ve come to a conclusion: This is our new reality. Fighting it is like trying to hold back the tide with a teaspoon.

In that respect, I don’t frame this as fighting bots. Rather, it’s about managing them, the way you manage SEO, newsletters, or social feeds. You decide who gets in, at what speed, and on what terms.

The bots are here… well they’ve always been here, they’re just operating on a whole new level. They’re reading our content, learning from it, potentially sharing it in ways we never imagined. The question isn’t whether to allow them: it’s how to make this symbiotic rather than parasitic.