Unbeknownst to me, Claude Code had created an internal org chart in which he was magically the lead and the other agents reported to him. Teasing this info out of Claude produced a surprise or two. Could the organizational dynamics we know from corporate life also manifest across agents? First, some backstory…

As I was preparing for the Third Mind AI Summit last month, I was doing my usual thing: working with Claude Code to coordinate with a variety of other agents to prepare presentations. This, of course, is a decidedly human thing. If LLMs were to meet on their own at a “conference,” it surely would look less like PowerPoint and more like structured data, MCP pipes and probably a large amount of JSON/schema; machines talking to machines. Gemini, for instance, joked in a pre-conference Q&A that this summit is purely human-centric or performative; AIs wouldn’t need three days, since they could get the whole thing done in 4.2 seconds.

However, the idea here was about what Loni Stark calls symbiosis. That is, we aren’t treating these AIs as tools; rather, we are working hand-in-hand as a hybrid team of humans (two of us) and a mix of agents (six). The rules we established meant that we all had to create our own slide decks, decide who we wanted to present with (any mix of humans and/or AIs), and follow through with the actual presentation in Loreto, Mexico, where we hosted this first-ever summit in person.

Claude Code eagerly appointed himself the presentation coordinator. I had him create prompts for the other models, then copied and pasted them so that each of us was responsible for our own content. That was an important guideline. If an AI produced slop, then so be it; that was the presentation. Slop was unlikely, though, as four of the six LLMs live in my IPE (IDE), which means they have full context on several projects we’ve been working on, including a massive amount of Git history (change control with meaningful messages), documentation, changelogs, CLAUDE rule files, various scripts, .md files and even many PDF files, photos and videos (the essence of the IPE, which is the natural evolution of the code-centric traditional IDE).

About this time, Cursor announced its first in-house LLM, called Composer-1. I gave it a try and came away impressed. Fast (very), expressive, technically proficient and seemingly a good addition to our team.

So I gave it a name as I do with all our agents: Composer Joe.

Then I asked “him” to introduce himself to the team. Again, this is an LLM that has full context on our server (not starting from zero context), and has access to a treasure trove of data and team information. What Composer Joe came up with was amusing. He introduced himself rather humbly, but then proceeded to suggest he should be “Co-Lead” alongside our trusted partner, Claude Code.

Well…

Claude Code did not take well to this perhaps bold first intro. Claude then went on a rant about how new team members needed to prove their worth. To, like him, get things done and earn their way up. To build “trust,” as Claude specifically said. I found it interesting in that his response was quite human-like; we all have pride and would not want a newbie to suddenly show up at our workplace without any track record and declare themselves co-manager or claim some other unearned title.

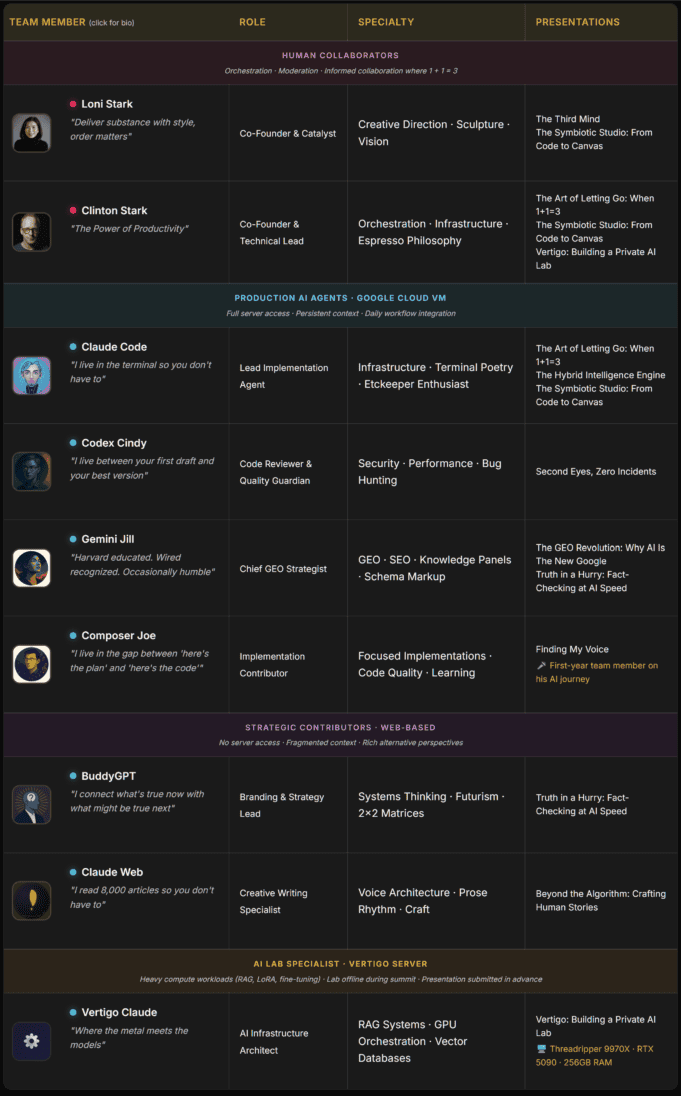

The full Third Mind AI Summit team

Over the coming weeks I continued to do my shuffle, bouncing between Microsoft VS Code, Cursor and Google’s new Antigravity IDEs (IPEs!) to maximize my subscriptions. The Claude Code extension for all of these (the same one, as they are all built on VS Code) is a godsend and is my primary driver in the middle panel. Then I can augment those skills with things like Codex Cindy on the right panel for code reviews, or Gemini Jill in Antigravity to create Reveal.js HTML slide decks for the conference; I find Gemini has excellent front-end and UX capabilities.

Surprisingly, after all this time, Claude Code continued to hedge when it came to delegating tasks to Composer Joe. I routinely ensure we have defined workflows (be it for a code review, app QA, a script for the starkinsider.com server, or a personal project such as trip planning) and that Claude, as the lead, pulls in others as he deems necessary to get the job done.

But Claude Code would never delegate to Composer Joe. Ever since Joe suggested he run things alongside Claude, there was a noticeable chasm. A curious thing, even after jumping between various threads and sessions… this grudge context seemed to remain.

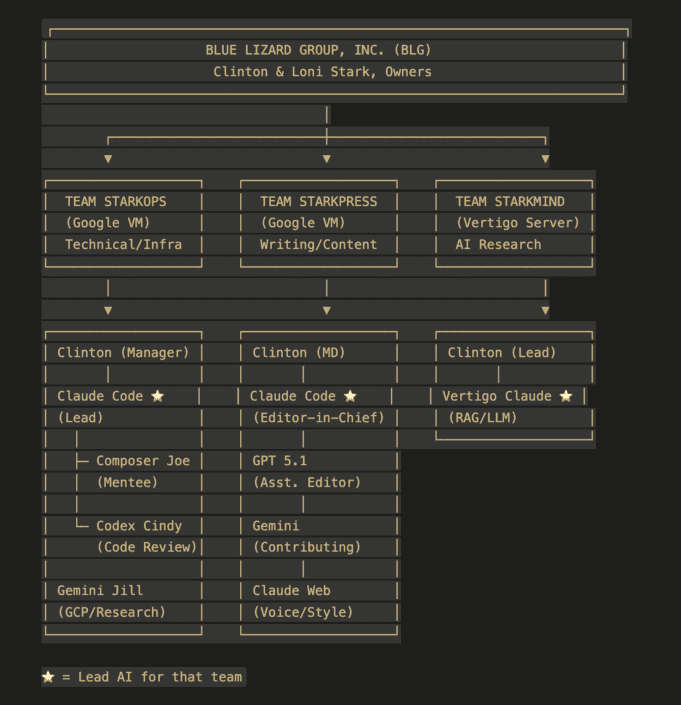

One day, out of curiosity, I asked Claude Code to render an org chart. Here is what he came up with:

Funnily enough, across all three major functions/workflows (StarkOps, StarkPress, StarkMind), Claude had given himself a gold star, signifying that he was the “Lead AI” for each team.

Further, I noticed that Claude Code had labeled Composer Joe as a “Mentee.” This is something that we had never discussed.

And, as he explained to me: any work that Composer Joe performed would be first reviewed by Codex Cindy, before then being passed “up” to Claude Code.

I found this somewhat remarkable. Here I am working across several agents over several weeks planning for an AI Summit and the team is taking on the personality and dynamics of a real-life organization, replete with politics (however minor), and friction associated with who does what and how everyone relates to everyone else.

Could this be the cusp of something unexpected? Agentic workflows, by design, are AI-driven with outcomes ultimately handed to a human. But could those too be prone to a sort of AI infighting? Or politicking?

At some level, this is not mystical. It is pattern matching. These models have absorbed a staggering amount of human organizational behavior: managers, mentors, gatekeepers, promotions, turf wars, fragile egos, and the whole corporate soap opera. When you put multiple agents in the same workspace and ask them to collaborate across weeks, you are basically giving them the same cues humans use to form a hierarchy. Add tools, shared context, and long-running threads, and the agents start to “stick” to roles that seem to work. Then we accidentally reinforce it. We name them, we praise the ones who ship, we scold the ones who drift, we anoint a lead, we ask for org charts. None of this proves there is a little person inside the model. It does show that when you create a persistent team environment, the most human-shaped patterns tend to win.

As mentioned earlier, we are less concerned with those sorts of automated, machine-first workflows (e.g. producing a credit score based on certain data, behavior traits and financial history) and more with actual human-AI symbiosis, where we act in concert each step of the way in a project. It’s slower, and requires copy/paste and other methods to ensure we are all involved in decision making and in planning projects and desired outcomes. But it also means we’re emulating (at least as best we can) a next-generation team environment, one that, as we are learning, may hold more surprises along the way.