What started as a frustrated copy-paste of server error logs has evolved into a full-fledged AI research lab. This is the story of how Stark Insider went from struggling with basic server maintenance to building custom AI infrastructure, and what we learned along the way.

The IT Dungeon Gets a Makeover

For nearly two decades, Stark Insider has run on a Google Cloud VM hosting an Ubuntu server. It’s been our foundation, but also our nemesis. “I used to call it the IT Dungeon,” explains Clinton Stark, “because I was terrified of working on it. I knew nothing about server management, and keeping it running was frustrating.”

That anxiety defined the pre-AI era. Problems meant diving into Stack Overflow, hunting through random blog posts, and hoping a solution from 2014 might still work. More often than not, it didn’t.

Loni Stark was already deep into AI by summer 2024. Her Harvard coursework had exposed her not just to AI tools, but to an entire ecosystem of modern platforms that younger developers took for granted. She was building products with LLMs at Adobe and experimenting with AI in her art studio, feeding drawings into ChatGPT for 3D rendering and using it to iterate on designs in ways that would have taken weeks manually.

Her killer app was creative: AI as a collaborative studio partner.

But one day this past June, while Loni was attending a clay and steel sculpture workshop at Anderson Ranch Arts Center in Colorado, Clinton tried something different. Faced with massive error logs, he copied and pasted them directly into ChatGPT.

“It said, ‘Oh, I see the problem. Do this, do that, do the other,’” Clinton recalls. “And it worked. I was suddenly having a conversation with ChatGPT about solving problems, changing configuration files, running commands. The server got more stable than it had been in years.”

The IT Dungeon had been conquered. The name no longer felt appropriate.

When Robots Come Knocking

The server stabilization was just the beginning. Loni posed an intriguing question: Were LLMs crawling Stark Insider? Were chatbots indexing our content? For her, Stark Insider has always been a sandbox for experiencing firsthand the macro changes happening across the web.

Scripts written by Claude and ChatGPT revealed the answer: yes, and in increasing numbers. Every visitor — human or bot — leaves a timestamped entry in access logs. The analysis revealed a fascinating ecosystem of web crawlers, from the “blue chip” bots like Claude and ChatGPT to a constantly evolving cast of startup crawlers appearing weekly.
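To make the log analysis concrete, here is a minimal sketch of how such a script might count crawler visits. It is not the script used on Stark Insider: it assumes the server writes the common "combined" access log format, the list of user-agent substrings is only illustrative, and the sample lines are made up.

```python
import re
from collections import Counter

# Illustrative user-agent substrings (an assumption); a real list is longer and changes often.
KNOWN_BOTS = ["GPTBot", "ClaudeBot", "PerplexityBot", "Googlebot"]

# Combined log format: ip - - [time] "request" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) \S+ "(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)

def count_bot_hits(lines):
    """Count requests per known bot; all other visitors are grouped under 'other'."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match:
            continue  # skip lines that are not in the expected format
        agent = match.group("agent").lower()
        label = next((bot for bot in KNOWN_BOTS if bot.lower() in agent), "other")
        hits[label] += 1
    return hits

# Made-up sample entries, for illustration only.
SAMPLE = [
    '203.0.113.5 - - [10/Jun/2025:08:00:01 +0000] "GET /film-review/ HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '203.0.113.5 - - [10/Jun/2025:08:00:02 +0000] "GET /about/ HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '198.51.100.7 - - [10/Jun/2025:08:00:03 +0000] "GET / HTTP/1.1" 200 9000 "-" "Mozilla/5.0 (compatible; ClaudeBot/1.0)"',
    '192.0.2.44 - - [10/Jun/2025:08:00:04 +0000] "GET / HTTP/1.1" 200 9000 "-" "Mozilla/5.0 (Macintosh) Safari/605.1.15"',
    'this line is malformed and will be skipped',
]

if __name__ == "__main__":
    for bot, count in count_bot_hits(SAMPLE).most_common():
        print(f"{bot}: {count}")
```

Matching on the user-agent string only shows what a bot calls itself, so a count like this is a starting point rather than proof of identity.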

But Loni pushed the analysis further. “Don’t just look at what they call themselves,” she insisted. “Look at what they’re actually doing. What content they’re accessing, what patterns they’re following. Companies can name their bots whatever they want. The name isn’t a reliable indicator of intent.”

This behavioral analysis led to what we called the “Good Bot, Bad Bot” framework. We categorized crawlers not by their self-identification but by their observable actions. Good bots provide attribution, driving traffic back to Stark Insider when they surface our content. Claude from Anthropic proved particularly good at this, always citing sources and providing clickable links. Bad bots? They scrape without attribution, taking content with unclear intentions revealed only through their access patterns.

Managing this required implementing Fail2Ban, a jail system for misbehaving bots. Rules automatically detect suspicious behavior (like hitting the site 100 times in 10 seconds) and impose timeouts, escalating to permanent bans for repeat offenders (or “recidivists,” a word borrowed from French).
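The detect-and-escalate logic can be sketched in a few lines of Python. This is an illustration of the idea only, not Fail2Ban itself and not our configuration; Fail2Ban expresses the same idea through jail settings such as `maxretry`, `findtime`, and `bantime`. The class name `BotJail` and the ban durations are hypothetical, while the 100-hits-in-10-seconds threshold comes from the example above.

```python
from collections import defaultdict, deque

MAX_HITS = 100             # threshold from the text: 100 requests...
WINDOW_SECONDS = 10        # ...within 10 seconds
BAN_SECONDS = [600, 3600]  # hypothetical timeouts; any later offense is permanent

class BotJail:
    """Hypothetical sliding-window rate limiter with escalating bans."""

    def __init__(self):
        self.hits = defaultdict(deque)    # ip -> timestamps of recent requests
        self.offenses = defaultdict(int)  # ip -> number of bans issued so far
        self.banned_until = {}            # ip -> ban expiry (inf = permanent)

    def allow(self, ip, now):
        """Record a request at time `now` (seconds); return False if it is blocked."""
        if self.banned_until.get(ip, 0) > now:
            return False
        window = self.hits[ip]
        window.append(now)
        # Drop timestamps that have fallen out of the sliding window.
        while window and window[0] <= now - WINDOW_SECONDS:
            window.popleft()
        if len(window) >= MAX_HITS:
            count = self.offenses[ip]
            duration = BAN_SECONDS[count] if count < len(BAN_SECONDS) else float("inf")
            self.banned_until[ip] = now + duration
            self.offenses[ip] += 1
            window.clear()
            return False
        return True
```

An IP that trips the threshold is blocked for the first timeout, then a longer one, and the third offense is never lifted.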

“I would never have been able to implement bot management without AI,” Clinton emphasizes. The system now runs on 1,800 lines of custom code, far beyond what would have been feasible before.

The Hidden Web: Talking to Machines

As bot traffic increased, we realized something profound: the web is becoming a conversation between machines. Human-readable content is just the surface. Beneath it, a parallel layer of structured data — formatted in JSON — speaks directly to bots.

We began adding Schema.org markup behind our articles. When a bot visits a film review, it now sees not just the prose but structured information: movie title, director, actors, rating, all in machine-readable format. Organization information explains that “Stark Insider was founded in 2005 by Clinton and Loni Stark, covering technology and arts.”
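As a rough illustration, structured data for a film review is usually emitted as a JSON-LD block embedded in the page. The sketch below builds one in Python using Schema.org's `Review`, `Movie`, `Rating`, and `Organization` types. The function names and the film details are hypothetical, and this is not the code in our plugin; only the organization facts come from the paragraph above.

```python
import json

def film_review_jsonld(title, director, actors, rating, best_rating=5):
    """Build a Schema.org Review of a Movie as a plain dict (hypothetical helper)."""
    return {
        "@context": "https://schema.org",
        "@type": "Review",
        "itemReviewed": {
            "@type": "Movie",
            "name": title,
            "director": {"@type": "Person", "name": director},
            "actor": [{"@type": "Person", "name": name} for name in actors],
        },
        "reviewRating": {
            "@type": "Rating",
            "ratingValue": rating,
            "bestRating": best_rating,
        },
        "publisher": {
            "@type": "Organization",
            "name": "Stark Insider",
            "foundingDate": "2005",
            "founder": [
                {"@type": "Person", "name": "Clinton Stark"},
                {"@type": "Person", "name": "Loni Stark"},
            ],
        },
    }

def to_script_tag(data):
    """Wrap the dict in the script tag that crawlers look for in the page HTML."""
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"

if __name__ == "__main__":
    # Placeholder film details, for illustration only.
    print(to_script_tag(film_review_jsonld("Example Film", "Jane Doe", ["Actor One", "Actor Two"], 4)))
```

Filling in these fields is where the discipline comes from: if the director or rating is missing, the gap is obvious before the article is published.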

This wasn’t just about SEO. “It changes your content strategy,” Loni observes. “Having to create structured data makes you ask: do I actually have this essential information? It creates discipline.”

The entire system was codified into a custom WordPress plugin called Stark Insider Toolkit: about 2,000 lines of code written collaboratively with AI, featuring shortcodes that automatically generate structured data as we write.

The Explosion of Ambition

An interesting pattern emerged: more code was being written, but not just to replace existing functionality. The codebase was growing because we were building things that would have been unthinkable before.

“I realized you weren’t writing AI code to replace 18% of your existing 100 lines,” Loni notes. “You were writing 200 lines because you were creating entirely new automation and capabilities.”

ChatGPT and Claude proved to be enthusiastic enablers, always ready to suggest another feature, another refactoring, another optimization. “They love writing scripts,” Clinton laughs. “They’re technical. They always want to solve problems.”

This led to both breakthroughs and lessons about over-engineering. A 1,800-line Python backup script that uploads to Backblaze and Google Cloud Storage daily? Essential. The LLMs’ suggestion to refactor it into modular subdirectories? Unnecessary complexity for code that already worked.

The $200/Month Education

Working with thousands of lines of code meant bumping into context window limits (the amount of text an LLM can process at once). Copy, paste, analyze, receive corrections, copy, paste again. The iterative cycle consumed tokens rapidly.

“Before we knew it, we were on a Claude Max Pro plan at $200 a month,” Clinton says. “But we needed that processing power.”

Both viewed it as an education fund. “You could read about modularity, refactoring, context windows,” Loni reflects. “But it’s different to experience them. The fast iterations meant you were getting visceral understanding.”

The learning methodology evolved: cross-reference between multiple LLMs. ChatGPT’s recommendations get fed into Claude for a second opinion, and vice versa. For SEO questions, Google’s Gemini became the trusted authority — they built the search engine, after all.

“I didn’t take a course. I didn’t read a book,” Clinton notes. “But I learned that taking output from ChatGPT and asking Claude ‘What do you think?’ takes your game to another level.”

Three Ambitious Projects

By late summer, we weren’t just maintaining servers. We were envisioning entirely new applications:

StarkMind: A RAG (Retrieval Augmented Generation) system containing all 8,000 Stark Insider articles. The goal: search our archive, identify trends, maintain our editorial voice, and serve as a writing assistant that understands our style.

ClintClone: An AI version of Clinton trained on photographs, childhood memories, writing samples, and life experiences. Part digital twin, part writing assistant, part home automation interface, because Clinton has so thoroughly automated the house that Loni jokes she won’t be able to operate it without him.

Personal AI Brain: A secure, local LLM for analyzing financial records, health documents, and personal information too sensitive for public cloud services.

What we didn’t realize: these weren’t just creative ideas. Each aligned with established AI techniques. RAG for the document system. LoRA (Low-Rank Adaptation) fine-tuning for the clone. Local deployment for privacy.
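To show what RAG means in practice, here is a toy sketch of its retrieval step: find the articles most relevant to a question, then hand them to the model as context. It is an illustration under simplifying assumptions, not the StarkMind design. It ranks by word overlap (cosine similarity on word counts), where a real system would more likely use learned embeddings and a vector database, and the three "articles" are made-up stand-ins.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, articles, k=2):
    """Return the titles of the k articles most similar to the query."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(body))), title) for title, body in articles.items()]
    scored.sort(reverse=True)
    return [title for score, title in scored[:k] if score > 0]

def build_prompt(query, articles, k=2):
    """Assemble the augmented prompt: retrieved excerpts first, then the question."""
    context = "\n\n".join(f"{title}: {articles[title]}" for title in retrieve(query, articles, k))
    return f"Answer using only these excerpts:\n\n{context}\n\nQuestion: {query}"

# Made-up stand-ins for archive articles.
ARTICLES = {
    "Fail2Ban notes": "How we jail misbehaving bots and crawlers with Fail2Ban rules.",
    "Film review": "A review of a festival film, its director, and the lead actors.",
    "Backup script": "Daily backups of the server uploaded to cloud storage.",
}

if __name__ == "__main__":
    print(build_prompt("which bots and crawlers get banned", ARTICLES, k=1))
```

The generation step is simply sending that prompt to an LLM, so the model answers from the archive rather than from memory.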

“You weren’t starting with technology,” Clinton observes. “You started with the end use case, the value. Then we discovered the technology had names and was actually commonly used in enterprise.”

Building the Lab

These projects had one problem: they needed serious computational power. Clinton’s video editing workstation from 2019 couldn’t handle running LLMs. OpenAI’s newly released 20-billion and 120-billion parameter open-weight models brought it to a crawl.

The decision: build a proper AI research lab.

“We looked at it like an education fund,” Loni explains. Research-grade infrastructure requires more than raw power — it demands reproducibility, documentation standards, and proper software architecture. ChatGPT, Claude, and Gemini helped spec out the requirements.

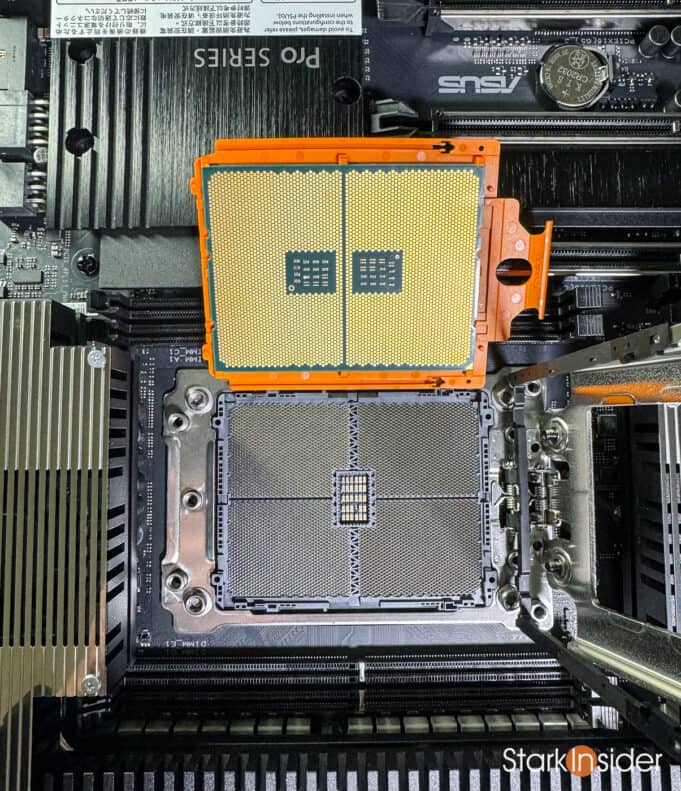

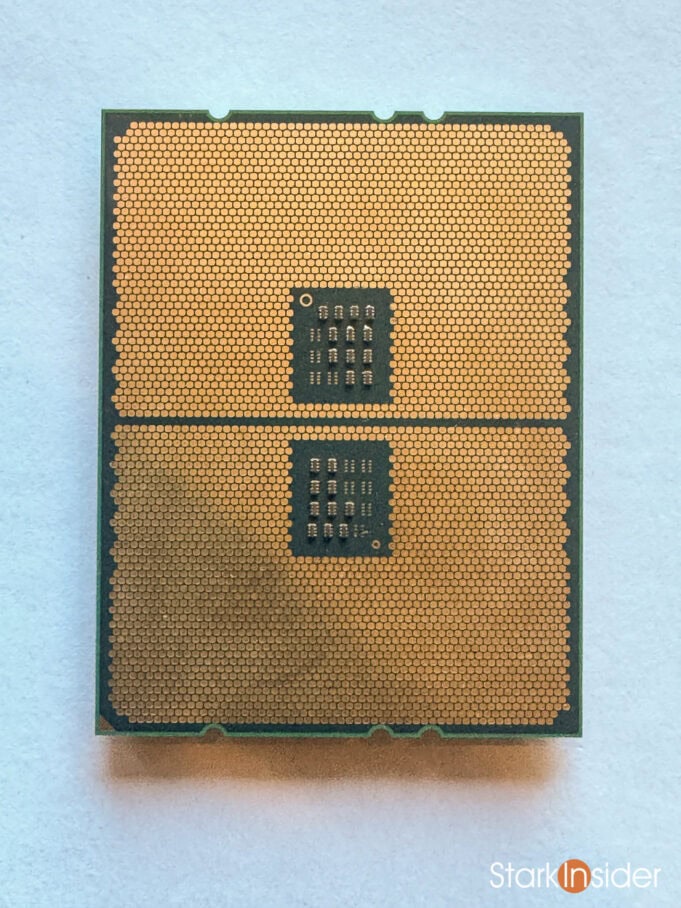

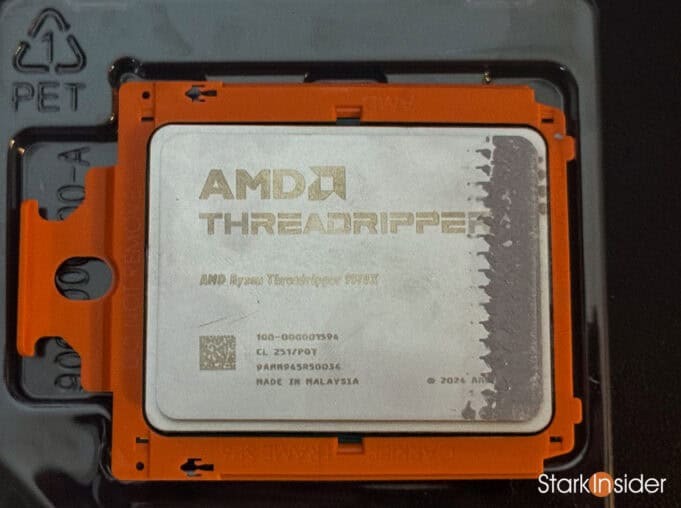

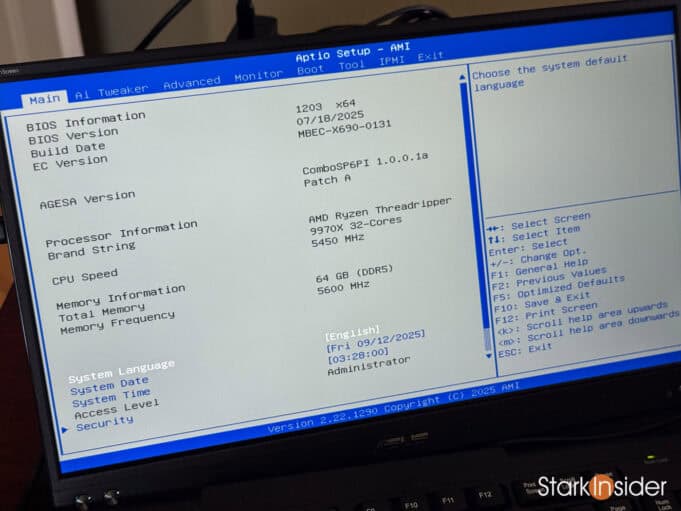

The build centered on a Threadripper CPU — Clinton had always dreamed of owning one. But the critical component was the GPU, specifically the VRAM (video RAM) that sits on it.

“I never connected the G in GPU with graphics card until this project,” Loni admits. The revelation: VRAM is what makes AI possible. The RTX 5090 they selected has 32GB of VRAM with roughly 1.8 terabytes per second of memory bandwidth, fast enough to handle the billions of mathematical computations required for LLM inference. Enterprise Nvidia H100 cards offer 80GB, but at dramatically higher cost.
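A little back-of-the-envelope arithmetic shows why VRAM size and bandwidth matter. The sketch below estimates how much memory a model's weights need and a rough ceiling on generation speed. The 32GB and 1.8 TB/s figures and the 20-billion and 120-billion parameter sizes come from the text; the bits-per-parameter values are assumptions about quantization, and the estimate ignores the extra memory needed for context and activations. The speed ceiling treats the model as dense (every weight read once per token), so it is only a rough guide.

```python
GB = 1e9  # decimal gigabyte, in bytes

def weights_gb(params_billion, bits_per_param):
    """Approximate size of the model weights in GB."""
    return params_billion * 1e9 * bits_per_param / 8 / GB

def fits_in_vram(params_billion, bits_per_param, vram_gb=32):
    """True if the weights alone fit in VRAM (ignores context and activations)."""
    return weights_gb(params_billion, bits_per_param) <= vram_gb

def max_tokens_per_second(params_billion, bits_per_param, bandwidth_tb_s=1.8):
    """Rough ceiling for a dense model: each token requires reading every weight once."""
    return bandwidth_tb_s * 1e12 / (weights_gb(params_billion, bits_per_param) * GB)

if __name__ == "__main__":
    for params in (20, 120):
        for bits in (16, 4):  # assumed precisions: 16-bit and 4-bit quantized
            size = weights_gb(params, bits)
            verdict = "fits" if fits_in_vram(params, bits) else "does not fit"
            print(f"{params}B at {bits}-bit: {size:.0f} GB, {verdict} in 32 GB")
```

Under these assumptions a 20-billion parameter model at 4 bits needs about 10 GB and fits comfortably, while a 120-billion parameter model at 4 bits needs about 60 GB and does not fit on a single 32GB card.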

The Counterfeit Crisis

Parts began arriving from Amazon and B&H Photo. The 30-day return window created pressure — if components arrived weeks apart, there wouldn’t be time to test everything before the deadline expired.

Clinton’s excitement led to a rookie mistake: instead of doing a minimal build to test each component, he assembled everything at once. The system wouldn’t POST (Power-On Self-Test). Days of troubleshooting followed.

“I was using AI to debug,” Clinton recalls. “Process of elimination, checking the power supply, the motherboard, everything.”

Then he noticed something odd. Physically examining the Threadripper processor — model 9970X, the latest Zen 5 generation — he compared it to images in Tom’s Hardware reviews. The pin configuration looked different. His chip had two arrays of pins instead of one.

The realization hit: he was holding a counterfeit.

“Someone bought it from Amazon, swapped it with their old Zen 4 chip, etched the 9970X model number on top using a laser engraver, and returned it,” Clinton explains. “Amazon’s return staff checked the top marking, saw 9970X, and put it back in circulation without checking the bottom.”

What Loni found revealing: ChatGPT and Claude both insisted the chip was correct. Only Perplexity flagged the problem.

“This is about knowledge cutoff dates,” she explains. “ChatGPT and Claude’s training ended when Zen 4 was current. They didn’t know Zen 5 existed. Perplexity does real-time search, so it caught what the others missed. That’s why we cross-reference between multiple LLMs — they have different strengths.”

The chip was returned. A genuine replacement arrived. The system booted.

Software Architecture for a Two-Person Lab

With hardware running, Clinton installed Ubuntu as the base OS and began configuring the research environment. But documentation became the unexpected challenge… and opportunity.

Using Claude’s Notion connector, he automated documentation creation. “I didn’t write these docs myself,” Clinton explains. “Claude created tables listing our tools, our architecture decisions, our build process.”

The documents became a “single source of truth” — persistent context that any LLM could reference. ChatGPT later gained read access to Notion, allowing it to query the same knowledge base.

“You have to tell them we’re a two-person research lab operating from home,” Loni emphasizes. “Otherwise they recommend enterprise-level solutions. Kubernetes, multi-node clusters, complex security frameworks we don’t need.”

Context shapes everything. The right context makes AI useful. The wrong context generates solutions for problems you don’t have.

Heat, Costs, and Reality

The server runs water-cooled with a radiator and six-fan array. “Like an ICE car,” as Clinton puts it. AI estimates suggest $1,000-1,200 in annual electricity costs if run 24/7, though actual costs depend on workload.
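The arithmetic behind an estimate like that is simple enough to check by hand. In the sketch below, the electricity rate and the wattage are hypothetical placeholders, not measurements from our server; only the $1,000-1,200 range comes from the estimate above.

```python
HOURS_PER_YEAR = 24 * 365  # running around the clock

def annual_cost(average_watts, rate_per_kwh):
    """Yearly electricity cost for a machine drawing average_watts continuously."""
    return average_watts / 1000 * HOURS_PER_YEAR * rate_per_kwh

def implied_watts(annual_dollars, rate_per_kwh):
    """Average draw that would produce a given yearly cost."""
    return annual_dollars / (HOURS_PER_YEAR * rate_per_kwh) * 1000

if __name__ == "__main__":
    rate = 0.30  # hypothetical price in dollars per kWh
    print(f"400 W average: ${annual_cost(400, rate):,.0f} per year")
    for dollars in (1000, 1200):
        print(f"${dollars} per year implies about {implied_watts(dollars, rate):.0f} W average")
```

At that assumed rate, the estimate corresponds to an average draw of very roughly 380 to 460 watts, which is why the real figure depends so heavily on how often the GPU is under load.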

Heat generation proved notable. The storage room where Vertigo (the name we gave the new server) sits can rise five degrees when the machine is under load. It’s a visceral reminder of something abstract: data center water consumption for cooling isn’t just an enterprise problem; it also scales down to home labs.

What We’re Building Next

With Vertigo operational, the three projects move from concept to implementation:

StarkMind will enable trend analysis across two decades of content, provide voice-matched writing assistance, and offer semantic search that understands context and editorial style rather than just keywords.

ClintClone preserves partnership knowledge and capabilities. “Everyone needs a Clint in their life,” Loni jokes, but the serious motivation is clear: creating a collaborative partner that can help with creative projects and home automation even when Clinton’s traveling.

Personal AI Brain addresses a critical gap: analyzing sensitive financial and health documents without exposing them to public cloud services.

Tools are being evaluated: an open-source Git system for version control, various frameworks for model training, and infrastructure for collaborative development. But there’s uncertainty too.

“We built this massive system and we’re going to learn what to do with it,” Loni reflects. “You can’t fully know where AI is going by just reading about it. You need to immerse yourself.”

The combination matters: hands-on experimentation paired with structured learning. We’re both working through the O’Reilly AI Engineering book (by Chip Huyen, highly recommended). Theory without practice is abstract. Practice without theory is chaotic. Together, they create understanding.

Beyond Productivity: Becoming Bionic

After three months, one insight dominates: this isn’t about productivity multiples.

“It’s not that I’m two times or even 100 times more productive,” Clinton reflects. “It’s that I can do things I couldn’t do as a normal human being. I couldn’t write 1,800 lines of backup script. I couldn’t build a custom WordPress plugin. I couldn’t configure an AI research server. But now I can.”

The word Clinton uses is “bionic,” as in augmented beyond natural human capability.

Loni frames it differently: companionship in the work. “It’s not lonely anymore. You have a team that tries to understand your context. Even when they get it wrong, the fact that they try makes you feel less alone than traditional search ever did.”

But there’s a discipline required. LLMs don’t tire. They never suggest lunch breaks. They generate endlessly, often enthusiastically pushing toward over-engineering.

“You can spend all day generating ideas and getting things 70% complete,” Loni observes. “But unless you bring a few things to finality, you end up with nothing. The human has to orchestrate, moderate, decide.”

They’ve become, in a sense, moderators of AI conversations. Using multiple LLMs simultaneously means bouncing ideas between them: “Okay, what do you think? Now you, what’s your take?”

“It’s like a therapy session,” Loni laughs.

The IT Dungeon Is Dead

The transformation is complete. What started as copy-pasting error logs into ChatGPT has become serious, research-grade infrastructure capable of running billion-parameter models locally, managing bot ecosystems, generating structured data for the machine-readable web, and pursuing projects that seemed impossible months ago.

The IT Dungeon, that source of fear and frustration, no longer exists. In its place: Vertigo, a home AI lab named after the journey of transformation, representing both the dizzying pace of change and the new heights now accessible.

“AI has leveled the playing field,” Clinton says simply. “I’m no longer afraid to walk down into the dungeon.”

For Loni, the journey confirmed what she’d observed in her enterprise work: “AI isn’t just a productivity tool. It changes what you can imagine building. The question isn’t ‘how much faster can I do this?’ It’s ‘what becomes possible that wasn’t before?’”

This is Part One of a multi-part series documenting Stark Insider’s AI journey. Next: building the AI software stack, training custom models, implementing RAG and LoRA systems, and discovering what happens when you try to clone a person.