Anthropic today announced that Claude Sonnet 4 now supports up to 1 million tokens of context via the API. In the announcement post, Anthropic noted that this is a 5x increase over the model’s previous 200K-token limit.

For now, it’s not clear whether this will find its way to the chat interface. But for developers and others who want to process long codebases while retaining context across extended sessions and projects, this is significant news.

The enhanced context window allows users to process entire codebases containing “over 75,000 lines of code” or analyze dozens of research papers simultaneously without losing track of relationships and dependencies across the material. For developers and researchers working with large, complex datasets, this represents a significant leap in capability.

The extra capacity is available through the Anthropic API and Amazon Bedrock, with support on Google Cloud’s Vertex AI “coming soon.”

Unlocking New Use Cases

The expanded context opens doors to several demanding applications that were previously impractical. Large-scale code analysis becomes feasible, enabling Claude to understand complete project architectures, identify cross-file dependencies, and suggest system-wide improvements. Document synthesis workflows can now process extensive collections of legal contracts, research papers, or technical specifications while maintaining awareness of relationships across hundreds of documents.

“Claude Sonnet 4 remains our go-to model for code generation workflows, consistently outperforming other leading models in production,” said Eric Simons, CEO and Co-founder of Bolt.new, which has integrated Claude into its browser-based development platform. “With the 1M context window, developers can now work on significantly larger projects while maintaining the high accuracy we need for real-world coding.”

Pricing: The Cost of Scale

This expanded capability doesn’t come without trade-offs. Anthropic has implemented a tiered pricing structure that reflects the increased computational demands of processing massive context windows.

Once a prompt exceeds 200K tokens, the input price per token doubles and the output price rises by 50%, so cost will be a consideration (see the table below).

Anthropic API Pricing:

| | Input | Output |
|---|---|---|
| Prompts ≤ 200K tokens | $3 / MTok | $15 / MTok |
| Prompts > 200K tokens | $6 / MTok | $22.50 / MTok |

Claude Sonnet 4 pricing on the Anthropic API
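To make the tiers concrete, here is a minimal Python sketch of a per-request cost estimate using the rates in the table. It assumes the tier is chosen by prompt (input) size alone and that the entire request, output included, is billed at the higher rate once the prompt exceeds 200K tokens, rather than only the tokens past the threshold. The function name `request_cost` is illustrative, and the rates should be checked against Anthropic’s current price list.

```python
# Rates in USD per million tokens (MTok), taken from the pricing table.
STANDARD = {"input": 3.00, "output": 15.00}      # prompts <= 200K tokens
LONG_CONTEXT = {"input": 6.00, "output": 22.50}  # prompts > 200K tokens
THRESHOLD = 200_000


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of a single request.

    Assumption: the tier is picked by input size, and the whole request
    (input and output) is billed at that tier's rates.
    """
    rates = LONG_CONTEXT if input_tokens > THRESHOLD else STANDARD
    return (input_tokens * rates["input"] + output_tokens * rates["output"]) / 1_000_000


if __name__ == "__main__":
    # A prompt right at the threshold vs. a near-full 1M-token prompt.
    print(f"${request_cost(200_000, 4_000):.2f}")  # $0.66
    print(f"${request_cost(900_000, 4_000):.2f}")  # $5.49
```

Under that assumption, a prompt that crosses the threshold by even one token is billed at the higher input rate for all of its tokens, so trimming a prompt to stay under 200K can matter.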

For organizations planning to use the full million-token capacity regularly, this pricing structure demands careful cost-benefit analysis. A single large codebase analysis or comprehensive document synthesis session could quickly become expensive. However, Anthropic offers some relief: prompt caching lowers the cost of resending the same context, and batch processing can cut costs by up to 50%.
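As a rough illustration of the batch discount, the sketch below compares running the same set of long-context jobs interactively and through batch processing. It assumes batch processing halves the price (the 50% figure above) and uses the >200K rates from the pricing table; `batch_savings` is a hypothetical helper, and prompt caching is left out because its savings depend on how much of each prompt is reused.

```python
LONG_CONTEXT_INPUT = 6.00    # USD per million input tokens, prompts > 200K
LONG_CONTEXT_OUTPUT = 22.50  # USD per million output tokens, prompts > 200K
BATCH_DISCOUNT = 0.50        # assumption: batch processing costs half the standard price


def batch_savings(jobs: int, input_tokens: int, output_tokens: int) -> tuple[float, float]:
    """Return (interactive_cost, batch_cost) in USD for `jobs` identical long-context requests."""
    per_job = (input_tokens * LONG_CONTEXT_INPUT + output_tokens * LONG_CONTEXT_OUTPUT) / 1_000_000
    interactive = jobs * per_job
    return interactive, interactive * (1 - BATCH_DISCOUNT)


if __name__ == "__main__":
    # e.g. 20 analyses of an 800K-token codebase, each producing 4K tokens of output
    interactive, batch = batch_savings(20, 800_000, 4_000)
    print(f"interactive: ${interactive:.2f}, batch: ${batch:.2f}")
```

Batch jobs are not interactive, so this trade-off fits workloads such as overnight codebase audits better than live debugging sessions.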

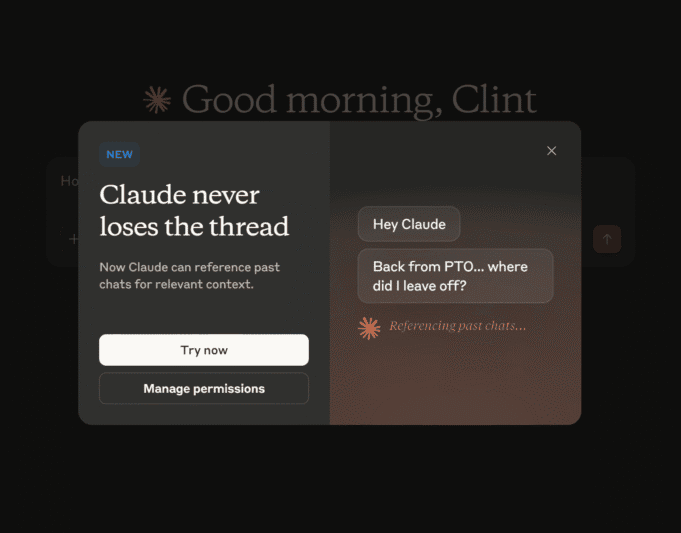

Plus: Claude never loses the thread

In addition, Anthropic revealed a form of memory, which lets you continue previous conversations and reference past chats. If you open claude.ai in your browser today, you’ll likely be greeted by this pop-up:

This is welcome news. As a Claude user, I find it frustrating every time the usage limit is tripped, because the thread is then shut down, often right in the most important part of a task. For instance, I was debugging an 1,800-line script. Claude had its aha moment: a light bulb went off, and the solution was on its way (in the artifact in the right-side panel). Then, boom. Conversation over. Usage limit reached. This is the end. A real cliffhanger. Like other users, I then copied and pasted the key context into a new thread, fingers crossed that Claude would remember the bug fix. Sometimes the thread hopping works; other times it doesn’t.

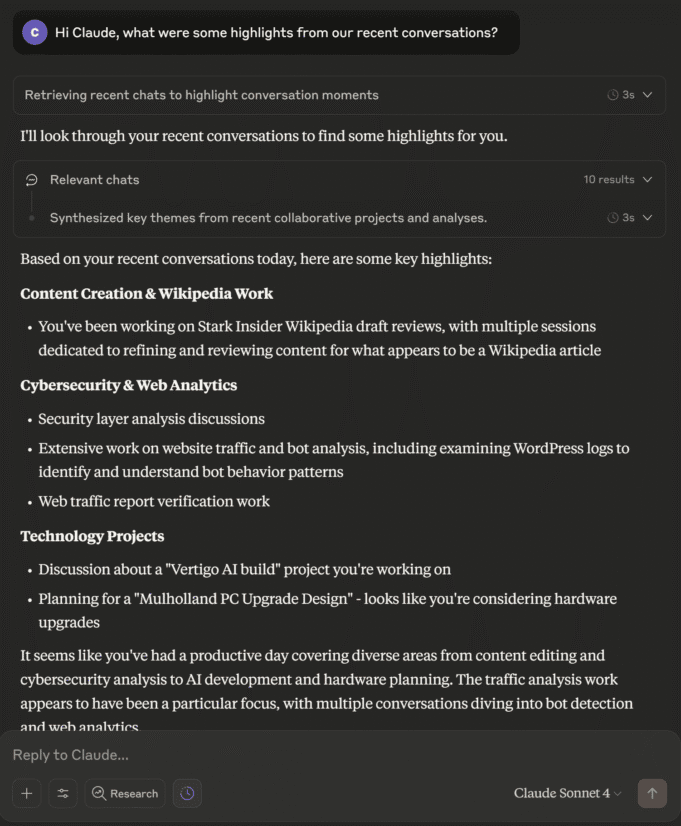

I’ve just started working with the feature, so it’s too early to tell how well it will work.

However, this potentially closes a gap between Claude and ChatGPT, which has had memory for quite some time (you can even manage saved memories in its settings).

“What was once impossible is now reality,” noted Sean Ward, CEO of London-based iGent AI, whose Maestro software engineering agent leverages Claude’s capabilities. “This leap unlocks true production-scale engineering—multi-day sessions on real-world codebases—establishing a new paradigm in agentic software engineering.”

The AI Arms Race Intensifies

Recent news and headlines:

- Google is spending $75 billion on AI for 2025

- Meta has committed $60-65 billion

- Microsoft plans $80 billion AI spend

- Pentagon awards up to $200 million in AI-related contracts (to OpenAI, Google, Anthropic, xAI)

- OpenAI’s GPT-5 launch

- Meta’s Llama API is now available (with a web interface as well)

The announcement comes amid a frantic pace of AI development. OpenAI recently released gpt-oss, its open-weight LLMs, and unveiled GPT-5, while multiple companies race to establish dominance in a rapidly evolving landscape.

We are definitely in the Wild West or Boom phase of this disruptive wave of innovation.

Venture capital will likely continue to flow by the boatload as young companies seek to become the next Meta, Google, or Amazon.

Expansion will be horizontal during the early days. After that, it’s hard not to envision a shakeout and consolidation phase in which AI companies begin to build full-stack platforms; at that point, maturation will begin. It’s too soon to say when that will happen, or what it will look like: no one could have predicted that LLMs would come this far, this fast, in their impact on consumers and the enterprise.

Getting Started

The enhanced Claude Sonnet 4 is available in public beta through the Anthropic API for customers with Tier 4 and custom rate limits, with broader availability rolling out over the coming weeks.
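For developers in the beta, opting in comes down to sending an extra request header. The sketch below only builds (and does not send) a Messages API request using Python’s standard library. The `context-1m-2025-08-07` beta header and the `claude-sonnet-4-20250514` model ID reflect Anthropic’s documentation at the time of writing and may change; `build_request` is a hypothetical helper, and reading the key from an `ANTHROPIC_API_KEY` environment variable is an assumption.

```python
import json
import os
import urllib.request

API_URL = "https://api.anthropic.com/v1/messages"


def build_request(prompt: str, max_tokens: int = 1024) -> urllib.request.Request:
    """Build (but do not send) a Messages API request with the 1M-context beta enabled."""
    body = {
        "model": "claude-sonnet-4-20250514",
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    headers = {
        "x-api-key": os.environ.get("ANTHROPIC_API_KEY", ""),
        "anthropic-version": "2023-06-01",
        # Opt-in header for the 1M-token context window (beta; verify the current value).
        "anthropic-beta": "context-1m-2025-08-07",
        "content-type": "application/json",
    }
    return urllib.request.Request(
        API_URL, data=json.dumps(body).encode("utf-8"), headers=headers, method="POST"
    )


if __name__ == "__main__":
    # In practice, the codebase or documents would be appended to this prompt.
    req = build_request("Summarize the architecture of this codebase.")
    print(req.get_method(), req.full_url)
    # To send it: urllib.request.urlopen(req) returns an HTTP response with a JSON body.
```

Keeping the request construction separate from sending makes it easy to inspect headers and payload size before paying for a million-token call.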

If you want to dive into the API or learn more: