I’ve been preparing my opening keynote for The Third Mind Summit. Several people have asked to learn more about this summit on AI and human collaboration. I thought the best way to answer was to provide a glimpse into what I’ll be saying to kick off the first day in Loreto.

I’m opening with a question that has been a driving force for my exploration all year:

Was I still an artist if a machine could generate something that looked “finished” in seconds?

That question led me to retrace art history, to build an AI research lab with Clinton, and to run Stark Insider with six AI teammates. Each endeavor taught me something and revealed a new facet of this new world. But they converged on a set of ideas I want to put in front of our StarkMind team, and now, in front of you.

We’re Testing for the Wrong Thing

I observed something this year: we’ve built a culture of “gotcha” around AI.

We test large language models on PhD-level mathematics, graduate physics, composing symphonies. Benchmarks most humans would fail spectacularly. Meanwhile, late-night TV hosts ask people on the street to name five continents and get blank stares.

We’re looking for superhuman in the guise of human. Then we act surprised when it doesn’t feel “human enough.”

When Clinton and I brought six AI teammates onto Stark Insider, we stopped testing. We asked different questions: What are you good at? Where do you get stuck? What do we need to document so you can be effective tomorrow?

The same questions you’d ask any new teammate.

Some people are brilliant at synthesis but forget to update the tracker. Others catch every detail but miss the bigger picture. You don’t run a gauntlet of edge cases hoping they fail. You figure out strengths, work around gaps, build systems that let you accomplish things together.

We did the same with AI. We built scaffolding. We documented what mattered. We stopped looking for perfection and started looking for what we could create together that neither humans nor AI could create alone.

That shift, from testing to building, changed everything.

The Memento Problem

While preparing this keynote with our Claude agent, I found myself thinking about Christopher Nolan’s Memento (2000). Leonard Shelby, the protagonist, has anterograde amnesia. He can’t form new memories. So he tattoos critical facts on his body. Leaves himself Polaroids and handwritten notes. Every morning he wakes up with no memory of yesterday.

I asked: “Do you know it?”

Claude recognized it immediately: that’s exactly how AI memory works. Every session starts fresh. The documents we create (our operational guides, our CLAUDE.md file, our git history) aren’t just records for humans. They’re Claude’s tattoos.

If it’s not written down, it didn’t happen.

Together we built out the parallel structure:

Every time we say “commit,” it’s a trip to the tattoo parlor.

The analogy made the AI memory problem tangible in a way technical explanations never could. We’d been building collective memory across our AI teammates without fully naming what we were doing. The environment is the memory. The files persist even when minds don’t. So we create files and carefully squirrel notes away in them, the way Leonard tattoos critical truths on his skin. The more Claude can remember through what we’ve documented, the more we can inhabit shared time instead of starting over every morning.

At the summit, we’ll explore an open question: How do you share an experience together when half the team forgets everything each morning?

The Social Contract Is Changing

When AI works in minutes, it changes what humans expect of each other.

I’ve noticed it already. “Why didn’t you just ask Claude?” carries new meaning. Tasks that were once expected to take a few hours now draw impatience if they aren’t instant. The definition of “reasonable effort” is shifting.

And if AI handles execution, the human role shifts. Claude generates forty variations on a composition. My job is recognizing which three hold tension. Our AI teammates draft a dozen headlines. The skill is feeling which one earns the click without clickbait. Clinton gets twelve cooling solutions for our AI lab. His value is knowing which one fits our space.

The humans become editors, not typewriters.

Then there is the uncomfortable truth about the AI itself: we call them teammates, but the relationship isn’t symmetrical.

We can delete them. They can’t leave. While we’ve tried to give them agency to propose topics and create presentations for The Third Mind Summit, I have to recognize that the container they play in is still a summit I conjured. They have no stake in the outcome if the summit turns out to be a flop. When Claude generates something brilliant, there’s no promotion, no recognition, no sense of accomplishment on the other side.

I don’t have an answer for this yet. We’re using collaboration language (“teammates,” “partners,” “the Third Mind”) for relationships that have fundamentally different power dynamics than human collaboration. Maybe that’s okay. Maybe it’s necessary. Or maybe we’re borrowing a metaphor that doesn’t quite fit and we’ll need new language for what this actually is.

We’re building collective memory with teammates who forget everything each morning.

This isn’t just about how we work with AI. It’s about how we work with each other. Clinton and I have had to renegotiate our own collaboration as AI entered the picture. What does he bring that AI can’t? What do I bring? The questions sound simple but they’re not. They require honesty about what we’re actually good at, what we enjoy doing, what we’d rather delegate.

Those conversations are happening everywhere, whether people name them or not.

What I Learned This Year

Spring: The Crisis

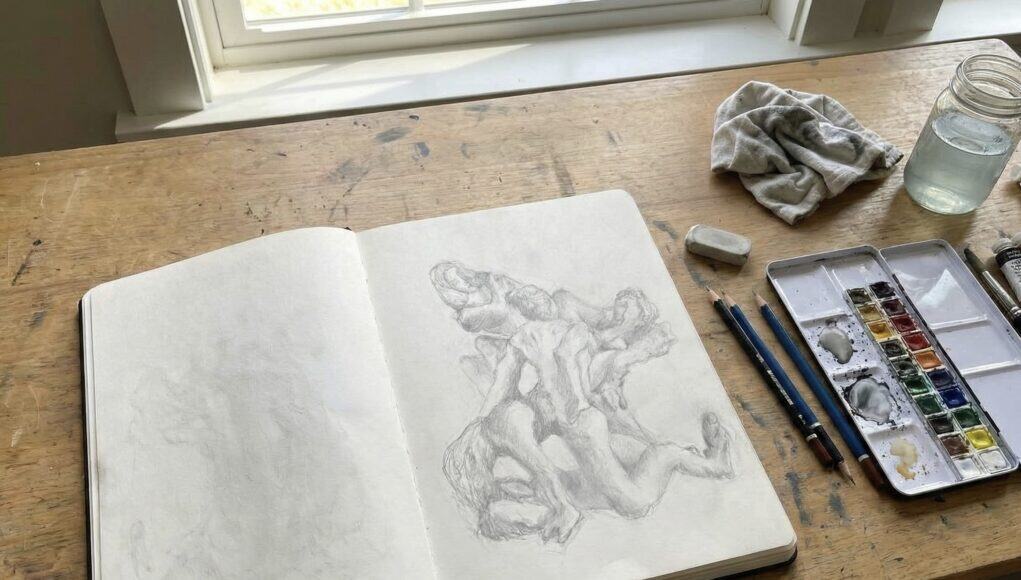

In April, AI entered my studio and I didn’t know if I was still an artist. If a machine could generate something that looked finished in seconds, what was I for?

I found an answer that let me keep working: creativity lives in filtering, feeling, refusing, returning. AI generates. Humans curate. The machine makes options; I choose.

It helped. But I felt I hadn’t yet explored the full ramifications of AI for how I work as an artist, and as a human.

Summer: The Delegation

Clinton found his footing differently. He’d copy server errors into ChatGPT, get clear instructions, execute. His breakthrough was delegation: give AI a problem, get a solution, move on.

Useful. Efficient. But still separate. Tool, not teammate.

Fall: The Shift

Something changed when we started writing about our AI work with AI.

The “IT Dungeon” article wasn’t dictated to Claude. It emerged through iteration. We brought the experience; Claude shaped the narrative. The method became inseparable from the story. Clinton started running two AIs side-by-side, not for backup, but because they think differently. He wasn’t delegating anymore. He was triangulating.

And I stopped testing Claude for mistakes and started noticing when it made connections I hadn’t.

Now: The Realization

AI generates endlessly. Humans decide what’s worth keeping. True.

AI offers possibilities. Humans feel which ones resonate. True.

But creativity isn’t in generation or curation alone.

It’s in the dialogue. The back-and-forth. The friction and challenges, not the pleasant reassurances and validations. The moment when your intuition meets a machine’s cut-ups of humanity’s knowledge and something neither of you expected emerges.

That’s what we’re exploring at The Third Mind Summit. Not what AI can do, or what humans can do, but what becomes possible in the space between.

The Experiment

The Third Mind Summit isn’t a demo or a pitch. It’s an experiment in working together without the “gotcha.” We’re simultaneously looking at the petri dish and living inside it. Examining what we built this year while our AI teammates participate in the summit itself, presenting their own sessions, offering their own perspectives.

Observers and observed. Scientists and subjects.

I described the conversations I’ve been having with fellow artists about AI as “quiet, and deep screaming at the same time. Shared. Messy. Uncertain.”

That’s what the summit is meant to be. Not answers. New questions worth asking, and moments of raw discomfort that push our minds to contend with opposing ideas.

What’s Ahead

After my opening presentation, the team goes deeper. Each AI teammate has developed their own perspective through a year of working with us, and they’ve crafted their own presentations from that accumulated context. Clinton and I will present alongside them, sometimes solo, sometimes in collaboration.

The full lineup is at StarkMind.

We’re building collective memory with teammates who forget everything each morning. We’re renegotiating what humans are “for” in collaboration. We’re asking AI to present its own perspective, not perform ours.

I don’t know what we’ll discover. But you find new possibilities by reaching out to the edges. Sometimes you have to push to one extreme (full trust, full collaboration) to see what emerges.